UPCOMING REGULATIONS AFFECTING TRUST & SAFETY IN GAMES

The games industry is facing a wave of new regulations relating to trust and safety. Policymakers have begun to take on the challenge of protecting users from harmful content, and game studios need to pay close attention to these changes to ensure they comply with global data protection and content moderation regulations.

To become (or remain) compliant with these upcoming regulations, studios will need to turn to more sophisticated safeguards to prevent disruptive behavior before it happens and to provide more visibility into harmful content within their games.

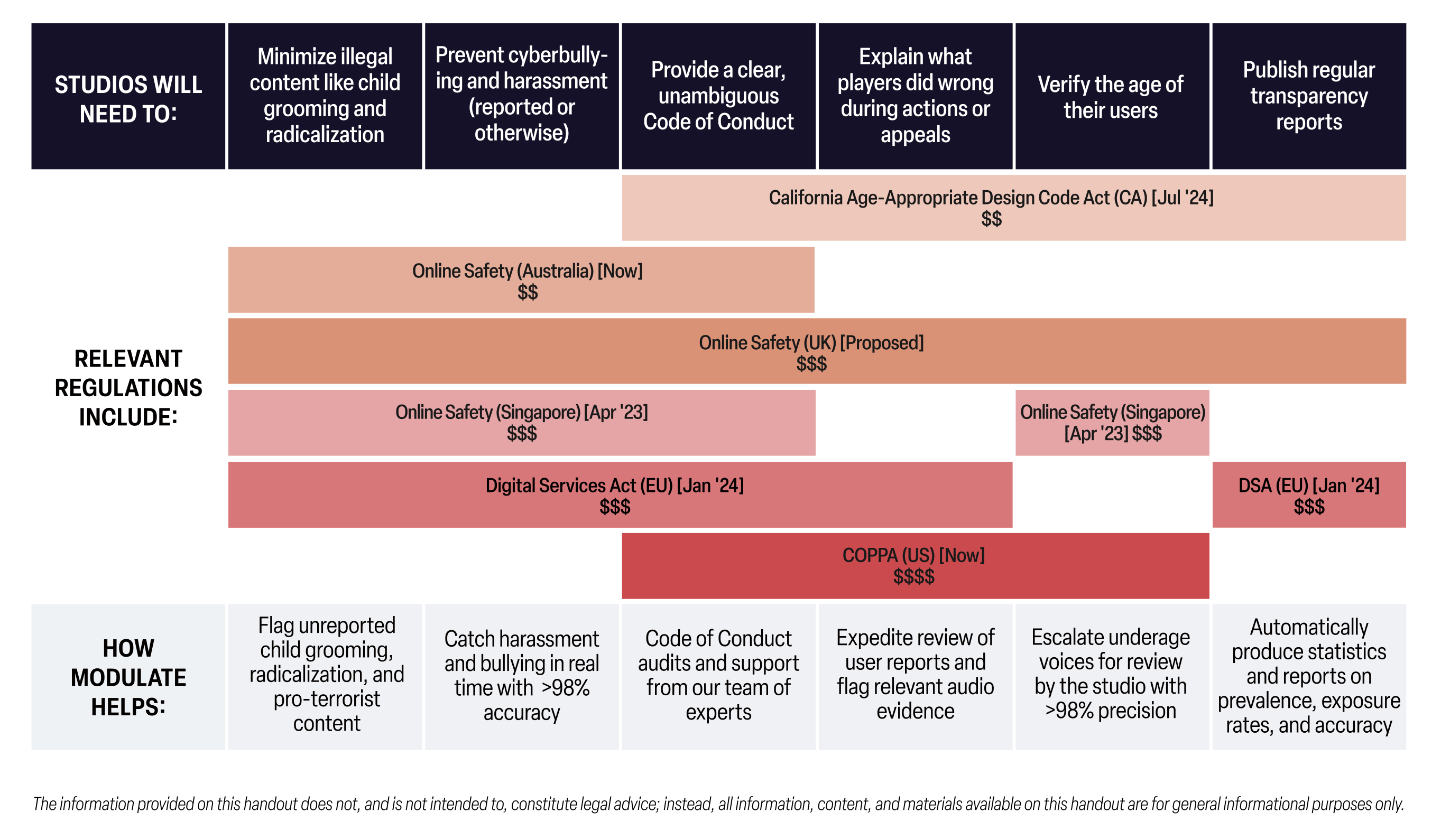

Check out our handy cheat sheet to see the key details and potential impacts of these regulations, along with more details on each below.

COMPLIANCE CHEAT SHEET

HOW MODULATE HELPS

Despite the large number of new regulations and the potentially hefty fines that accompany them, the good news is that most of these regulations share very similar themes and requirements.

The better news is that customers using ToxMod, Modulate’s voice-native, proactive moderation platform, are already extremely well-positioned to meet those requirements.

The best news is that we have further expertise from working with other studios on these challenges, and can support you across your wider compliance journey as well, including:

- Catching bad content with 98%+ accuracy across all relevant categories

- Code of Conduct audits and support from our team of experts

- Correlating player reports and appeals with the corresponding evidence, which automatically rules out obvious false reports

- Proactive detection that surfaces and reduces harm that studios would otherwise never learn about

- Automatic identification of exposures to harm, with robust statistics and reports

KEY UPCOMING REGULATIONS

Children's Online Privacy Protection Act (US)

COPPA is a US law that imposes controls on online platforms that are targeted towards children or have a large number of underage users. Among other requirements, COPPA requires parental consent before platforms can process the personally identifiable information (PII) of children under the age of 13.

COPPA imposes significant penalties on noncompliant platforms. Fines can reach roughly $43,000 per impacted child, meaning a platform with millions of underage users could find itself facing massive penalties.
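To put that per-child figure in perspective, here is a minimal sketch of the arithmetic. It assumes the rough $43,000 ceiling quoted above applies once per impacted child; the user counts and the function name `max_coppa_exposure` are hypothetical, and actual penalties are set case by case and may be far lower.

```python
# Illustrative only: the per-child figure is the rough ceiling quoted above,
# and the user counts below are hypothetical.
MAX_FINE_PER_CHILD_USD = 43_000


def max_coppa_exposure(impacted_children: int,
                       fine_per_child: int = MAX_FINE_PER_CHILD_USD) -> int:
    """Return the theoretical upper bound on fines, in US dollars."""
    if impacted_children < 0:
        raise ValueError("impacted_children must be non-negative")
    return impacted_children * fine_per_child


if __name__ == "__main__":
    for children in (1_000, 100_000, 2_000_000):
        ceiling = max_coppa_exposure(children)
        print(f"{children:>9,} impacted children -> up to ${ceiling:,}")
```

Even at the low end of these hypothetical user counts, the theoretical ceiling runs into the tens of millions of dollars.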

COPPA is designed specifically to protect children, and does allow for looser interpretation of some obligations when it’s in the best interest of the child. Modulate helps you stay compliant by limiting our collection of PII wherever possible, as well as restricting any data to be used solely for moderation in service of improving the user’s safety.

Digital Services Act (EU)

The Digital Services Act (DSA) is a European law designed to require online platforms to combat harmful and illegal behaviors and foster transparency across the industry. The DSA applies to all covered platforms from February 17, 2024, after which noncompliant organizations could be fined up to 6% of their annual revenue.

The DSA outlines a variety of obligations. Two of the most notable are a requirement to publish regular transparency reports disclosing the effectiveness of each platform’s moderation efforts, and a requirement to ban users who repeatedly upload or share illegal content. For platforms that use voice chat, identifying this content can be exceedingly difficult, as player reports are generally insufficient to find all such misbehavior. ToxMod can provide unique value here by identifying the worst behavior across the platform, helping you stay compliant and genuinely improve your community experience.

Online Safety Bill (UK)

Several countries currently have Online Safety Bills implemented or under consideration. The UK’s Online Safety Bill currently remains a proposal, but is expected to be implemented mid-2023. Once implemented, it will require platforms to proactively remove illegal content, provide expansive explanations in their terms of service regarding moderation practices, and limit the risk of underage users accessing adult or otherwise harmful content.

As currently written, the UK Online Safety Bill could impose penalties of up to 10% of the noncompliant organization’s annual revenue.
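Revenue-based caps scale very differently from per-child fines. The sketch below compares the 6% ceiling quoted for the DSA with the 10% ceiling quoted for the UK Online Safety Bill. The revenue figure and the function name `max_revenue_fine` are hypothetical, and the result is an upper bound under the percentages stated in this document, not a prediction of any actual penalty.

```python
# Illustrative only: percentages are the ceilings quoted in this document;
# the revenue figure is hypothetical.
REVENUE_CAP_PERCENT = {
    "Digital Services Act (EU)": 6,
    "Online Safety Bill (UK)": 10,
}


def max_revenue_fine(annual_revenue: int, cap_percent: int) -> int:
    """Return the maximum fine as a whole-number share of annual revenue."""
    if annual_revenue < 0:
        raise ValueError("annual_revenue must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    return annual_revenue * cap_percent // 100


if __name__ == "__main__":
    revenue = 500_000_000  # hypothetical studio with $500M in annual revenue
    for regulation, percent in REVENUE_CAP_PERCENT.items():
        fine = max_revenue_fine(revenue, percent)
        print(f"{regulation}: up to ${fine:,} ({percent}% of ${revenue:,})")
```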

The UK Online Safety Bill focuses heavily on proportionate risk assessment, requiring platforms to focus their energy where the worst harms are happening. ToxMod assists with this by proactively monitoring conversations across the ecosystem and providing a categorized and prioritized list of the worst harms back to the studio. Our customers are thus able to ensure they are engaging with the most severe content first, and methodically take action against offenders.

GDPR (EU) and CCPA (CA)

GDPR and CCPA are broadly similar data protection laws implemented by the EU and the state of California respectively. Both laws require online platforms to be clear with users about how their data is used, and give end users the right to view their data or restrict its usage.

Modulate supports GDPR/CCPA compliance for its customers across the board - ensuring our own data processing is done securely; enabling data subject requests like the right to be forgotten; and de-identifying any data we process to avoid any interactions with personally identifiable information (PII). In addition, Modulate is ISO 27001 certified.

Online Safety Bill (Australia)

Australia also has an Online Safety Bill, which has been passed into law and expanded the powers of the eSafety Commissioner, an office solely devoted to enforcing online safety standards. The eSafety Commissioner is empowered to publish expectations for a variety of industries, including game developers, and has published a draft of the guidance for review. These draft expectations outline obligations including the use of automated tools to identify CSAM and other sorts of illegal or severely harmful content, as well as requiring platforms to offer robust reporting options for players.

While these expectations have not yet been finalized, Modulate stands ready to assist studios looking to proactively ensure their compliance. Our proactive voice moderation software can ensure studios become aware of illegal content even if players don’t report it, and can also augment player reports with substantially more context to help platforms take action efficiently and consistently.

The Age Appropriate Design Act (CA)

California recently passed the Age Appropriate Design Act (AADA), which takes full effect on July 1, 2024. The AADA requires online platforms to identify underage users, enable privacy by default for such child accounts, and regularly perform data-privacy risk assessments regarding new features, which must be submitted to the California Privacy Protection Agency (CPPA), a new agency specifically created to enforce this law. Experts expect the CPPA to be quite active once the law takes effect, with violations resulting in fines of up to $7,500 per impacted child.

The AADA explicitly permits processing of data when it is demonstrably in the best interests of the child, which likely includes safety tools like ToxMod. In addition, the recent settlement between the FTC and Epic demonstrated that enforcement agencies aren’t just interested in protecting child data from platforms; they also want to protect it from other users on the platform. As such, ToxMod’s proactive approach is invaluable for its ability to monitor the entire voice chat ecosystem. It gives platforms a better understanding of what bad actors might be up to, especially when they are targeting children, and enables the platform to respond in less than a minute, resolving the issue before pedophiles and other bad actors can build rapport with these vulnerable users.

DIG DEEPER

Read our full blog post to understand more about these regulations and the impacts they'll have on games studios: Safety and Privacy: How Upcoming Trust & Safety Regulation will Impact the Games Industry

TOXMOD IS GAMING'S ONLY VOICE-NATIVE MODERATION SOLUTION.

Built on advanced machine learning technology and designed with player safety and privacy in mind, ToxMod triages voice chat to flag bad behavior, analyzes the nuances of each conversation to determine toxicity, and enables moderators to quickly respond to each incident by supplying relevant and accurate context.

For more insights and perspectives on the changing world of Trust & Safety in games, sign up for Trust & Safety Lately.